User Acceptance Testing has always been the final hurdle before software goes live. End users frantically click through workflows, business stakeholders scramble to provide feedback, and project managers pray nothing critical gets missed in the production rush.

Traditional UAT burns through budgets, delays releases, and still lets bugs slip through. AI's impact on User Acceptance Testing isn't just about automation; it's about turning the most unpredictable phase of software delivery into a strategic advantage.

In this article, we'll explore:

- How AI is revolutionizing traditional UAT processes and eliminating common bottlenecks

- The specific ways artificial intelligence enhances test coverage while reducing manual effort

- A practical framework for implementing AI-powered UAT without disrupting existing workflows

AI transforms UAT from reactive testing to predictive quality assurance

The traditional UAT approach waits until the end of development to validate whether software meets user needs. AI flips this model entirely by enabling continuous validation throughout the development lifecycle.

Instead of hoping users will find time to test comprehensively, AI analyzes user behavior patterns, generates realistic test scenarios, and identifies potential issues before human testers even see the system.

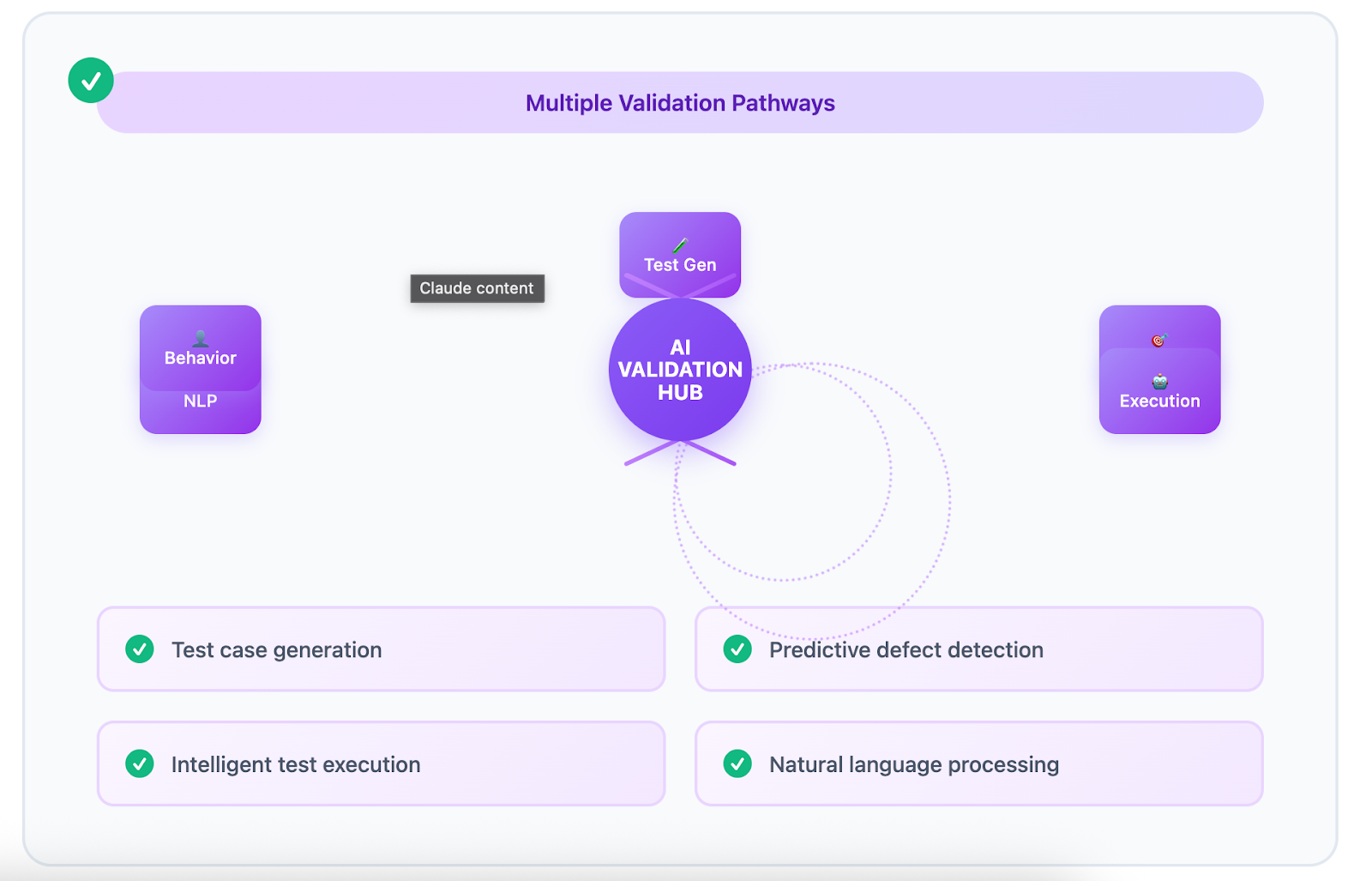

Key transformation areas:

- Test case generation: AI creates comprehensive test scenarios from requirements, user stories, and historical data

- Predictive defect detection: Machine learning identifies high-risk areas based on code changes and usage patterns

- Intelligent test execution: Automated agents simulate real user behavior across different workflows and environments

- Natural language processing: AI converts plain English requirements into executable test cases

The impact is measurable: Organizations implementing AI-powered UAT report 20-30% faster testing cycles and around 30% higher defect detection rates compared to traditional manual approaches.

Rather than replacing human testers, AI amplifies their effectiveness by handling repetitive tasks and surfacing insights that would take weeks to discover manually.

Where AI excels in User Acceptance Testing

Artificial intelligence doesn't just speed up existing UAT processes; it solves fundamental problems that have plagued acceptance testing for decades. Here's where AI creates the most significant impact.

Automated test case generation from requirements

One of the biggest UAT bottlenecks is creating comprehensive test scenarios that cover real-world usage. AI eases this bottleneck by analyzing requirements documents, user stories, and business process descriptions, then generating detailed test cases from them automatically.

What AI can create from your requirements:

- Complete user workflows with realistic data variations

- Edge case scenarios based on historical defect patterns

- Cross-functional test cases that span multiple system components

- Regression tests that confirm existing functionality still works

Pro Tip: AI-generated test cases can carry traceability links back to the original requirements, which makes coverage gaps easy to spot and simplifies compliance audits.
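
To make the traceability idea concrete, here is a minimal sketch of a requirement-to-test-case matrix. The `UatCase` class, the requirement IDs, and the sample data are all hypothetical; the point is only to show how linked test cases reveal requirements that nothing covers yet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UatCase:
    case_id: str
    title: str
    requirement_ids: tuple[str, ...]  # requirements this case claims to verify


def build_traceability(requirements, test_cases):
    """Map each requirement ID to the IDs of the test cases that cover it."""
    matrix = {req_id: [] for req_id in requirements}
    for case in test_cases:
        for req_id in case.requirement_ids:
            # Skip links to unknown requirements instead of inventing entries.
            if req_id in matrix:
                matrix[req_id].append(case.case_id)
    return matrix


def uncovered(matrix):
    """Return requirement IDs with no linked test case, in sorted order."""
    return sorted(req_id for req_id, cases in matrix.items() if not cases)


# Hypothetical requirements and generated cases, for illustration only.
requirements = {
    "REQ-1": "User can log in with email and password",
    "REQ-2": "User can reset a forgotten password",
    "REQ-3": "User can export a monthly statement",
}
generated_cases = [
    UatCase("TC-1", "Login with valid credentials", ("REQ-1",)),
    UatCase("TC-2", "Login with wrong password", ("REQ-1",)),
    UatCase("TC-3", "Reset password via email link", ("REQ-2",)),
]

matrix = build_traceability(requirements, generated_cases)
print(uncovered(matrix))  # requirements that still need a test case
```

In this sample data, `REQ-3` has no linked case, so it is the gap a reviewer would be asked to close before sign-off.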

Intelligent defect prediction and risk assessment

Traditional UAT finds problems after they've already been built. AI-powered systems predict where defects are most likely to occur before testing even begins, allowing teams to focus their limited testing time on the highest-risk areas.

AI prediction capabilities:

- Code complexity analysis that identifies modules prone to user-facing bugs

- Historical defect pattern matching across similar features

- Integration risk assessment based on system dependencies

- Performance bottleneck prediction under realistic load conditions
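
As a rough illustration of risk-based prioritization, the sketch below ranks modules with a simple weighted score. This is a hand-tuned heuristic standing in for a trained model, not a machine learning implementation; the `ModuleStats` fields, the weights, and the sample modules are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    complexity: float    # e.g. cyclomatic complexity, normalized to 0..1
    recent_churn: float  # share of lines changed this release, 0..1
    past_defects: float  # historical defect density, normalized to 0..1


# Assumed weights; a real system would learn these from labeled history.
WEIGHTS = {"complexity": 0.3, "recent_churn": 0.4, "past_defects": 0.3}


def risk_score(module: ModuleStats) -> float:
    """Weighted sum of the three signals, rounded for readability."""
    score = (
        WEIGHTS["complexity"] * module.complexity
        + WEIGHTS["recent_churn"] * module.recent_churn
        + WEIGHTS["past_defects"] * module.past_defects
    )
    return round(score, 3)


def rank_by_risk(modules):
    """Return modules ordered from highest to lowest risk score."""
    return sorted(modules, key=risk_score, reverse=True)


# Hypothetical modules, for illustration only.
modules = [
    ModuleStats("reports", complexity=0.4, recent_churn=0.1, past_defects=0.2),
    ModuleStats("auth", complexity=0.8, recent_churn=0.9, past_defects=0.6),
    ModuleStats("payments", complexity=0.7, recent_churn=0.3, past_defects=0.8),
]

for module in rank_by_risk(modules):
    print(module.name, risk_score(module))
```

Testers would start with the module at the top of the ranking and spend whatever time remains on the lower-scoring ones.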

Real-world example: A financial services company used AI to analyze its mobile banking app updates. The system correctly predicted that new authentication flows would cause user confusion, allowing the team to redesign the interface before UAT began.

Dynamic test execution with adaptive behavior

Unlike rigid test scripts that break when UI elements change, AI agents adapt to application modifications and continue testing seamlessly. This dramatically reduces test maintenance overhead while improving coverage.

Adaptive testing features:

- Self-healing test scripts that automatically adjust to interface changes

- Dynamic data generation that creates realistic test scenarios on demand

- Multi-browser and device testing without separate script maintenance

- Intelligent waiting and retry mechanisms that handle asynchronous operations
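
Two of these features, self-healing locators and retry with waiting, can be sketched in a few lines. The example below uses a plain dictionary as a stand-in for a rendered page, so `find_element`, `retry`, and the locator strings are hypothetical helpers rather than calls from any real browser automation library.

```python
import time


def find_element(page, locators):
    """Try locators in priority order; return (locator, element) for the first hit.

    `page` is a plain dict standing in for a rendered UI; a real suite would
    query a browser driver instead.
    """
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            return locator, element
    raise LookupError(f"no locator matched: {locators}")


def retry(action, attempts=3, base_delay=0.01):
    """Call `action` until it succeeds, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return action()
        except LookupError:
            if attempt == attempts - 1:
                raise  # out of attempts, surface the failure
            time.sleep(base_delay * (2 ** attempt))


# Hypothetical page where the button id changed from "submit-btn" to "send-btn".
page = {"send-btn": "<button>Send</button>"}
submit_locators = ["submit-btn", "send-btn", "button[type=submit]"]

used, element = retry(lambda: find_element(page, submit_locators))
print(used)  # the fallback locator that matched
```

A rigid script would fail on the renamed button; the fallback list lets the test continue and report which locator it had to fall back to, so the script can be updated later.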

Contextual insights and root cause analysis

When defects are discovered, AI provides detailed context about why issues occurred and how they impact the overall user experience. This accelerates fix implementation and prevents similar problems in future releases.

AI-powered analysis includes:

- Impact assessment showing which user workflows are affected

- Root cause identification linking defects to specific code changes

- Similar defect detection across the application

- Recommended fix strategies based on historical resolution patterns

Where human expertise remains essential

Despite AI's powerful capabilities, successful UAT still requires human judgment and oversight. Understanding these boundaries helps teams implement AI effectively while maintaining quality standards.

Business context and subjective evaluation

AI excels at functional validation but struggles with subjective aspects of user experience that require human intuition and business understanding.

Human expertise is critical for:

- Evaluating whether workflows align with actual business processes

- Assessing user interface aesthetics and emotional responses

- Validating that software meets unwritten cultural and organizational expectations

- Making final acceptance decisions based on business risk tolerance

Stakeholder communication and change management

UAT involves complex stakeholder dynamics, and navigating them takes emotional intelligence and communication skills that AI cannot replicate.

Essential human activities:

- Explaining technical issues to non-technical stakeholders

- Managing conflicting feedback from different user groups

- Negotiating scope changes and timeline adjustments

- Building consensus around acceptance criteria

Complex exception handling

While AI handles standard test scenarios effectively, unusual edge cases and system failures often require human creativity and problem-solving skills.

Human intervention needed for:

- Investigating unexpected system behavior that falls outside trained patterns

- Designing tests for completely new features without historical data

- Handling integration issues with external systems

- Making judgment calls about acceptable vs. unacceptable defects

The most successful AI-powered UAT implementations use human-AI collaboration, where artificial intelligence handles routine validation while humans focus on strategic decision-making and complex problem-solving.

The hybrid UAT model: AI intelligence with human oversight

The future of User Acceptance Testing isn't purely automated or entirely manual. Smart teams are building hybrid approaches that leverage AI's efficiency while preserving essential human judgment.

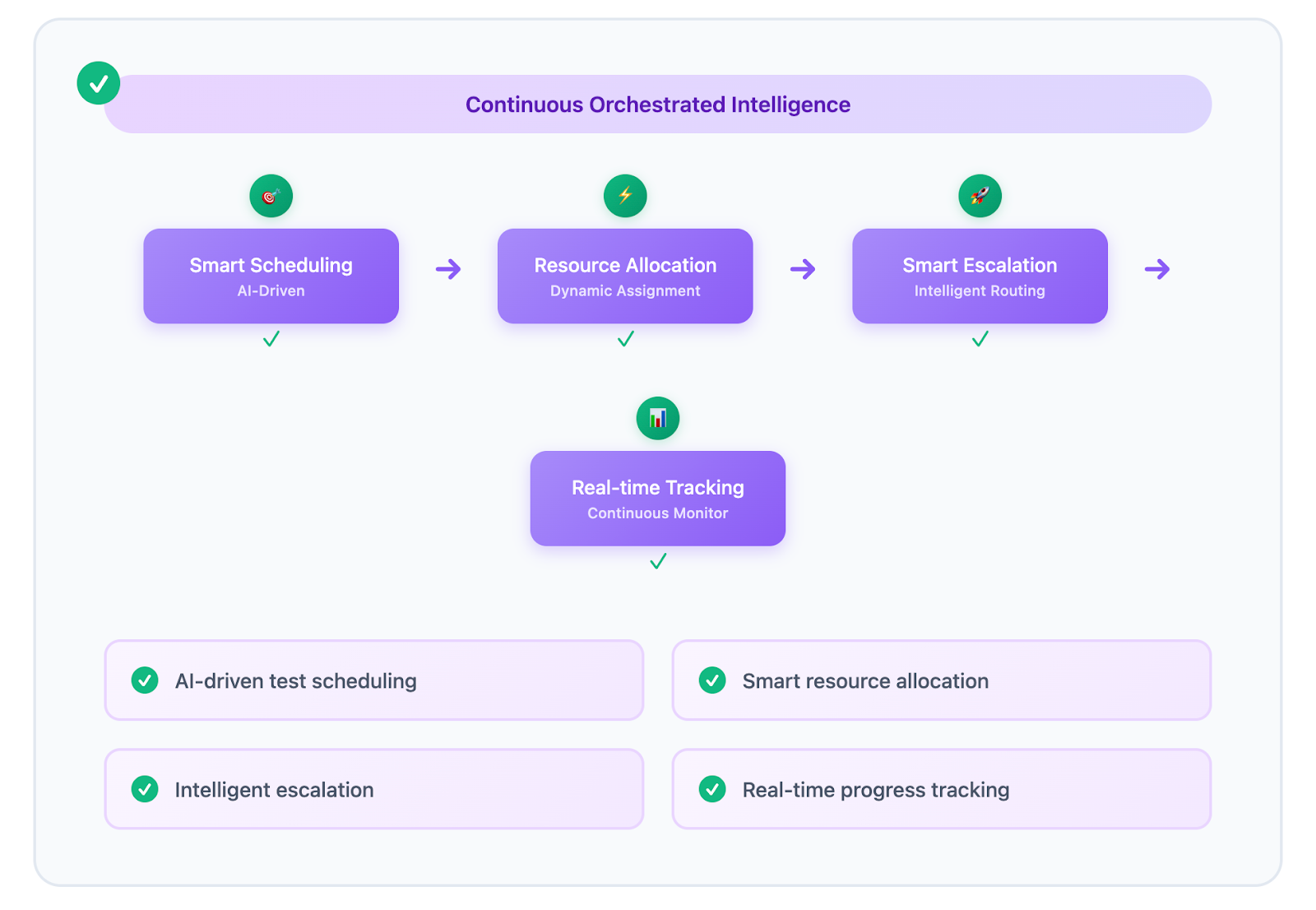

Intelligent test orchestration

AI manages the logistics of UAT execution while humans focus on analysis and decision-making. This creates a seamless workflow that maximizes both efficiency and quality.

Hybrid orchestration includes:

- AI-driven test scheduling: Automatic test execution based on code changes and deployment schedules

- Smart resource allocation: Dynamic assignment of test cases to human testers based on expertise and availability

- Intelligent escalation: Automated routing of complex issues to appropriate team members

- Real-time progress tracking: Continuous monitoring of test completion and quality metrics

Enhanced feedback collection and analysis

Traditional UAT feedback is often scattered across emails, spreadsheets, and verbal communications. AI centralizes and analyzes this feedback to identify patterns and prioritize fixes.

AI-enhanced feedback management:

- Natural language processing that categorizes user feedback automatically

- Sentiment analysis to gauge user satisfaction levels

- Priority scoring based on business impact and technical complexity

- Automated assignment of feedback items to development teams
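
The sketch below shows the shape of such a triage pipeline using keyword rules. It is a deliberately simple stand-in for real natural language processing and sentiment models; the keyword lists, the 1-to-5 `business_impact` scale, and the sample comments are assumptions made for illustration.

```python
CATEGORY_KEYWORDS = {
    "defect": ("error", "crash", "broken", "fails"),
    "usability": ("confusing", "hard to find", "unclear", "slow"),
    "enhancement": ("would be nice", "wish", "could you add"),
}
NEGATIVE_WORDS = ("crash", "broken", "confusing", "frustrating", "fails")


def categorize(text):
    """Return the first category whose keywords appear in the comment."""
    lowered = text.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "uncategorized"


def priority(text, business_impact):
    """Add one point per negative word to a 1..5 business impact rating."""
    lowered = text.lower()
    negativity = sum(word in lowered for word in NEGATIVE_WORDS)
    return business_impact + negativity


# Hypothetical feedback items: (comment, business impact rated 1..5).
feedback = [
    ("It would be nice to have dark mode", 1),
    ("The export page crashes when I pick a date range", 5),
    ("The approval button is confusing and hard to find", 3),
]

triaged = sorted(
    ((priority(text, impact), categorize(text), text) for text, impact in feedback),
    reverse=True,
)
for score, category, text in triaged:
    print(score, category, text)
```

Even this crude version turns scattered comments into a single ranked queue; a production system would replace the keyword rules with trained classifiers.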

Predictive UAT planning

Rather than guessing how long UAT will take or what resources are needed, AI analyzes historical data and current project characteristics to provide accurate estimates.

AI-powered planning delivers:

- Realistic timeline predictions based on feature complexity and team capacity

- Resource requirement forecasting, including tester availability and skill needs

- Risk assessment highlighting potential UAT bottlenecks before they occur

- Optimization recommendations for test environment setup and data preparation
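
One simple way to ground a timeline prediction in history is a least-squares line through past releases. The sketch below fits UAT duration against release size; the story-point and day figures are made-up sample data, and a single-variable linear fit is an assumption chosen for brevity, not a claim about how any particular tool estimates.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar


def estimate_days(story_points, slope, intercept):
    """Predict UAT duration in days for a release of the given size."""
    return round(intercept + slope * story_points, 1)


# Hypothetical history: release size in story points vs. UAT days taken.
past_points = [10, 20, 30, 40]
past_days = [4, 6, 8, 10]

slope, intercept = fit_line(past_points, past_days)
print(estimate_days(25, slope, intercept))
```

With real data the points will not sit on a perfect line, so the estimate should be read as a starting point for planning rather than a commitment.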

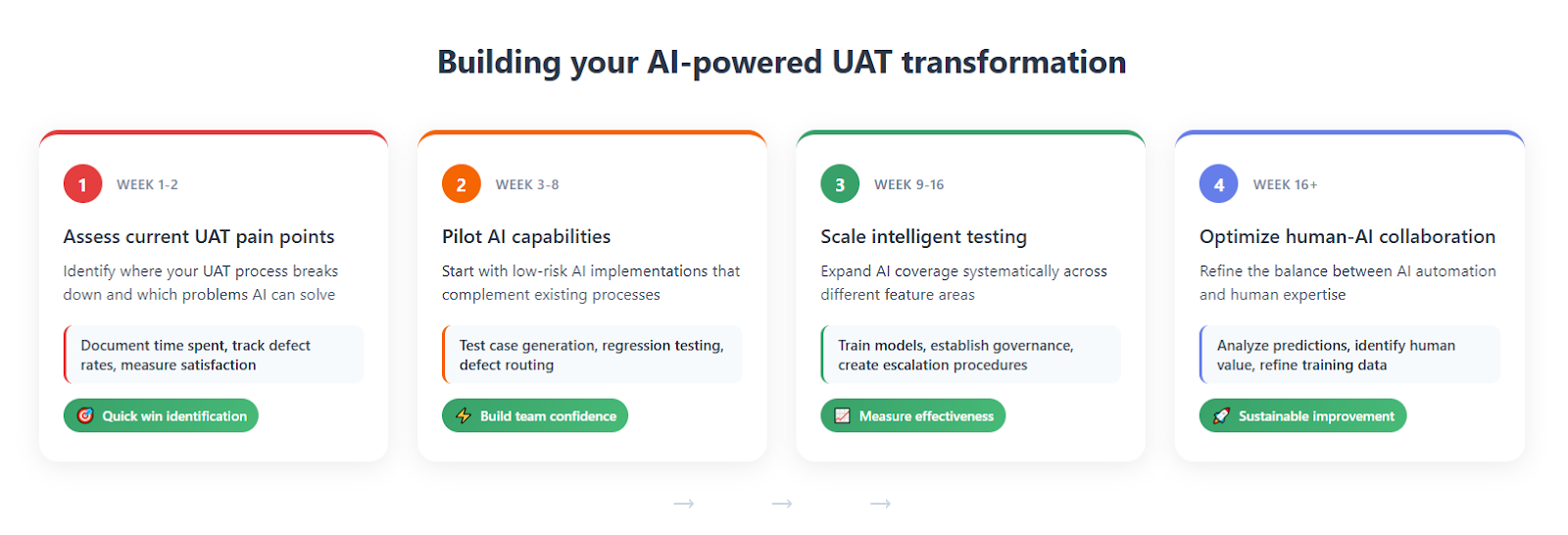

Building your AI-powered UAT transformation

Ready to evolve beyond traditional acceptance testing limitations? This systematic approach helps teams implement AI capabilities while maintaining the stakeholder confidence that UAT success requires.

Phase 1: Assess current UAT pain points (Weeks 1-2)

Before adding AI capabilities, identify exactly where your current UAT process breaks down and which problems AI can solve most effectively.

UAT assessment framework:

- Document time spent on test case creation vs. actual testing

- Track defect discovery rates across different types of testing

- Measure stakeholder satisfaction with current feedback turnaround times

- Identify recurring bottlenecks that delay release approvals

Quick wins identification: Look for repetitive tasks that consume significant time but don't require human judgment. These become your first AI automation candidates.

Phase 2: Pilot AI capabilities in controlled scenarios (Weeks 3-8)

Start with low-risk AI implementations that complement existing processes rather than replacing them entirely. This builds team confidence while demonstrating tangible value.

Ideal pilot scenarios:

- Automated test case generation for well-defined feature requirements

- AI-powered regression testing to validate existing functionality

- Intelligent defect categorization and routing

- Predictive analytics for test coverage assessment

Implementation strategy: Run AI-generated tests alongside manual testing initially, comparing results to build trust in AI accuracy and identify areas for refinement.
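
A side-by-side comparison like this can be as simple as comparing the sets of defects each approach found. In the sketch below, the defect IDs and the `compare_runs` helper are hypothetical, and the agreement figure is the Jaccard overlap of the two sets.

```python
def compare_runs(ai_defects, manual_defects):
    """Summarize overlap between defects found by AI and by manual testing."""
    ai, manual = set(ai_defects), set(manual_defects)
    union = ai | manual
    return {
        "both": sorted(ai & manual),
        "ai_only": sorted(ai - manual),
        "manual_only": sorted(manual - ai),
        # Jaccard overlap: shared defects divided by all distinct defects.
        "agreement": len(ai & manual) / len(union) if union else 1.0,
    }


# Hypothetical defect IDs from one pilot cycle.
ai_found = ["D-1", "D-2", "D-4"]
manual_found = ["D-1", "D-3", "D-4"]

report = compare_runs(ai_found, manual_found)
print(report)
```

Defects in `manual_only` show where the AI-generated tests need refinement, while those in `ai_only` are worth a human review to confirm they are genuine issues rather than false positives.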

Phase 3: Scale intelligent testing across feature areas (Weeks 9-16)

Once pilot results prove AI effectiveness, expand coverage systematically across different types of features and testing scenarios.

Scaling considerations:

- Train AI models on your specific application and user behavior patterns

- Establish governance processes for AI-generated test cases and results

- Create escalation procedures for handling AI-identified issues

- Develop metrics to measure AI contribution to overall UAT effectiveness

Success metrics to track:

- Reduction in test case creation time

- Increase in defect detection before user testing

- Improvement in stakeholder satisfaction with UAT outcomes

- Decrease in production issues post-release
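
These metrics are easiest to discuss as percentage changes against a pre-AI baseline. The sketch below shows the arithmetic; the metric names and all figures are invented sample values, not results from any real project.

```python
def percent_change(baseline, current):
    """Signed percentage change from baseline to current."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return round((current - baseline) * 100 / baseline, 1)


# Hypothetical figures: (baseline, current) for each tracked metric.
metrics = {
    "test_case_creation_hours": (40, 28),
    "defects_found_before_user_testing": (20, 26),
    "production_issues_post_release": (10, 7),
}

changes = {name: percent_change(old, new) for name, (old, new) in metrics.items()}
for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```

Note that the desired direction differs by metric: creation time and production issues should fall, while early defect detection should rise.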

Phase 4: Optimize the human-AI collaboration model (Week 17+)

Use data and feedback to refine the balance between AI automation and human expertise, creating a sustainable model that improves over time.

Optimization strategies:

- Analyze which AI predictions prove most accurate and expand those capabilities

- Identify tasks where human oversight adds the most value

- Refine AI training data based on real-world outcomes

- Establish continuous improvement processes for AI model performance

Pro Tip: Start with feature areas that have clear requirements and predictable user workflows. Complex, creative features may require more human oversight initially while AI models learn your specific context.

Choose intelligence over instinct in your UAT strategy

User Acceptance Testing doesn't have to be the chaotic final sprint before production. AI transforms UAT from reactive problem-finding into proactive quality assurance that prevents issues before they impact users.

The smartest teams don't treat UAT as an isolated process. They integrate intelligent testing capabilities within their broader project management workflows, creating systems where UAT insights inform sprint planning, resource decisions, and stakeholder communications seamlessly.

Stop hoping your software will work for end users. The question isn't whether to implement AI in your UAT process. It's how quickly you can start building intelligent systems that ensure your software will work, every time.
