The Waterfall model dates back to Winston Royce's 1970 paper on managing large software projects, and the U.S. Department of Defense later built it into standards such as DOD-STD-2167, long before agile existed. Decades later, nearly 80% of large-scale projects in regulated industries still rely on it.

The challenge is making this rigid framework smarter. That’s why teams are exploring how to integrate AI into Waterfall projects to add foresight, automation, and speed to every phase.

In this article, we will:

- Show you the complete roadmap to smarter AI waterfall integration

- Guide you on setting essential governance controls for success

- Help you prove AI value with ROI and performance tracking

AI waterfall integration: Your complete roadmap to smarter project management

Integrating artificial intelligence into traditional waterfall methodology enhances each sequential phase with intelligent automation and predictive capabilities. This transforms rigid processes into adaptive, data-driven workflows while preserving waterfall's structured approach.

Ready to revolutionize your waterfall projects? Let's dive into the step-by-step implementation process.

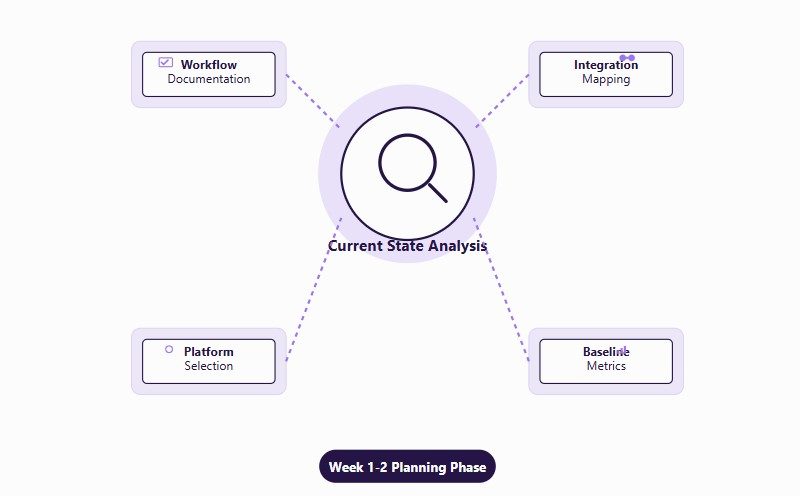

Step 1: Pre-integration assessment and planning (weeks 1-2)

The foundation of successful AI integration starts with understanding your current processes and identifying strategic enhancement opportunities. This critical planning phase ensures smooth implementation without disrupting your established workflows.

Key actions to complete:

- Document existing requirements gathering, design, implementation, testing, and maintenance workflows

- Map specific integration points where AI can enhance each phase without breaking the sequential structure

- Choose appropriate AI platforms (Microsoft Project with AI Copilot, ClickUp Brain, or Forecast) based on project complexity

- Record baseline metrics, including timeline accuracy, resource utilization rates, and defect discovery timelines
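To make the baseline step concrete, here is a minimal sketch of how two of these metrics could be computed from historical records. The `CompletedProject` schema, the sample projects, and the exact formulas are assumptions for illustration; your own definitions of timeline accuracy and utilization may differ, and defect discovery timelines are left out for brevity.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CompletedProject:
    """One historical project record (hypothetical schema)."""
    name: str
    planned_days: int
    actual_days: int
    hours_booked: float     # hours logged against project work
    hours_available: float  # total team capacity over the same period


def timeline_accuracy(p: CompletedProject) -> float:
    """1.0 means the project finished exactly on plan; lower means a larger miss."""
    return 1 - abs(p.actual_days - p.planned_days) / p.planned_days


def utilization(p: CompletedProject) -> float:
    """Share of available capacity that was actually booked."""
    return p.hours_booked / p.hours_available


def baseline(projects: list[CompletedProject]) -> dict[str, float]:
    """Average each metric across past projects to get a pre-AI baseline."""
    return {
        "timeline_accuracy": round(mean(timeline_accuracy(p) for p in projects), 3),
        "resource_utilization": round(mean(utilization(p) for p in projects), 3),
    }


# Made-up sample data for illustration only.
history = [
    CompletedProject("billing-revamp", 100, 120, 3200, 4000),
    CompletedProject("portal-upgrade", 80, 88, 2700, 3000),
]
print(baseline(history))
```

Storing the result alongside the date it was captured gives you a fixed reference point for the validation work in Step 7.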

Pro tip: Start with a comprehensive process audit using flowcharts to visualize where AI can add the most value. This visual mapping helps stakeholders understand the integration strategy and reduces resistance to change.

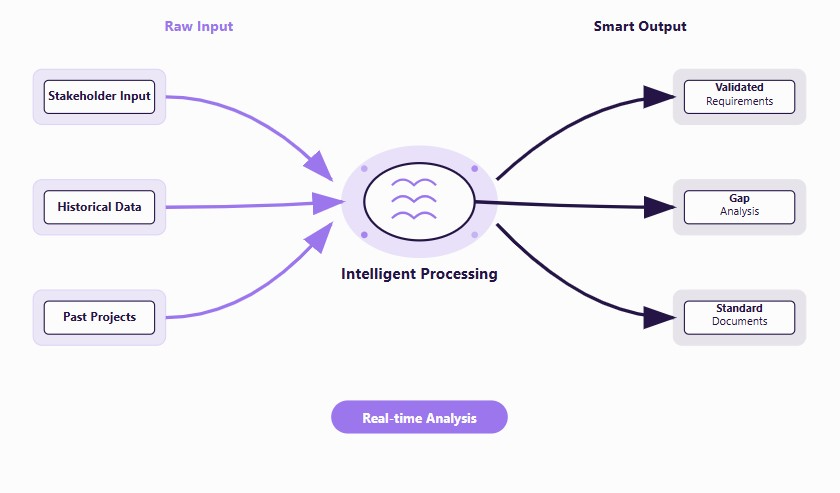

Step 2: Requirements phase AI integration (immediate implementation)

Transform your requirements gathering from reactive documentation to proactive intelligence. AI-powered tools can capture nuanced stakeholder needs and predict potential gaps before they become costly problems.

Implementation checklist:

- Deploy NLP tools like Otter.ai for stakeholder interview transcription and analysis

- Feed historical project data into machine learning models to identify missing requirements

- Set up automated requirement validation against similar past projects

- Use AI writing tools (Jasper or ChatGPT) to generate standardized requirement documents

Teams that adopt AI transcription often find it does more than save time: it captures subtle requirements that human note-takers can miss. The AI analyzes conversation patterns and highlights potential conflicts or ambiguities in real time.
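The idea of validating requirements against similar past projects can be sketched without any machine learning at all. The example below is a simple frequency check standing in for a trained model: it flags requirement categories that most past projects included but the new draft lacks. The function name, the category labels, and the 60% threshold are all hypothetical.

```python
from collections import Counter


def missing_requirement_categories(
    new_spec: set[str],
    past_specs: list[set[str]],
    min_share: float = 0.6,
) -> list[str]:
    """Return categories found in at least `min_share` of past specs but absent from the new one."""
    counts = Counter(cat for spec in past_specs for cat in spec)
    threshold = min_share * len(past_specs)
    return sorted(
        cat for cat, n in counts.items()
        if n >= threshold and cat not in new_spec
    )


# Made-up requirement categories from three earlier projects.
past = [
    {"authentication", "audit logging", "data retention", "reporting"},
    {"authentication", "audit logging", "reporting"},
    {"authentication", "data retention", "audit logging"},
]
draft = {"authentication", "reporting"}
print(missing_requirement_categories(draft, past))
```

A real deployment would likely replace the exact-match categories with text similarity or a classifier, but the review workflow stays the same: the tool proposes likely gaps and the business analyst decides.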

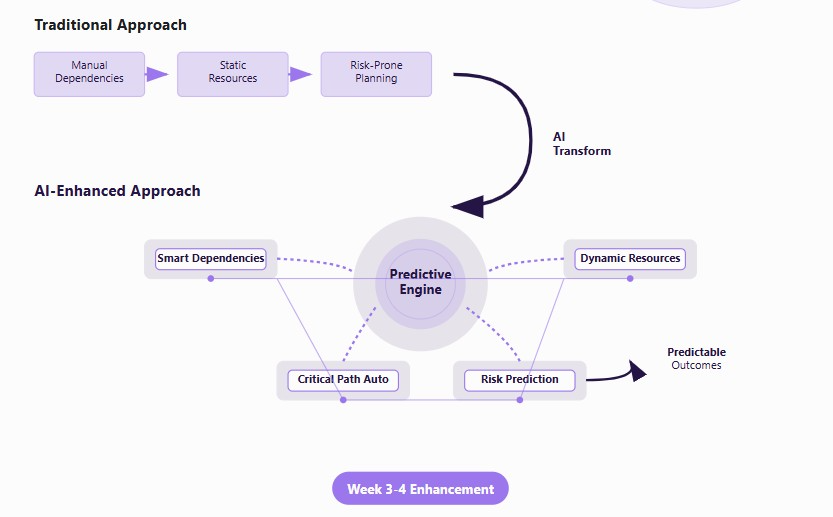

Step 3: Design phase AI enhancement (weeks 3-4)

The design phase becomes significantly more predictable when AI algorithms handle complex dependency mapping and resource optimization. This is where traditional waterfall planning meets modern predictive capabilities.

Critical implementation steps:

- Install Microsoft Project with Copilot or LiquidPlanner for dynamic timeline creation

- Configure AI dependency mapping to automatically identify task relationships and critical paths

- Deploy resource allocation tools that analyze team capacity, skills, and availability

- Implement risk prediction models using historical project data

Pro tip: The design phase is where AI integration pays the biggest dividends in waterfall projects. Unlike agile methodologies, where changes are expected, waterfall's sequential nature means design errors compound throughout the project. Investing extra time in AI-powered design validation during weeks 3-4 prevents costly rework in later phases.
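Dependency mapping and critical-path identification rest on a well-known graph calculation, whatever tool performs it. The sketch below shows that calculation using Python's standard `graphlib` module (Python 3.9+). It is not how any of the products named above work internally; the task names and durations are invented for illustration.

```python
from graphlib import TopologicalSorter
from typing import Optional


def critical_path(
    durations: dict[str, int],
    depends_on: dict[str, set[str]],
) -> tuple[int, list[str]]:
    """Return (total duration, task sequence) of the longest dependency chain."""
    graph = {task: depends_on.get(task, set()) for task in durations}
    finish: dict[str, int] = {}
    previous: dict[str, Optional[str]] = {}
    # static_order() yields every task after all of its predecessors.
    for task in TopologicalSorter(graph).static_order():
        # The latest-finishing predecessor determines the earliest start.
        latest = max(graph[task], key=lambda d: finish[d], default=None)
        finish[task] = durations[task] + (finish[latest] if latest is not None else 0)
        previous[task] = latest
    # Walk back from the task that finishes last to recover the chain.
    node: Optional[str] = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = previous[node]
    return total, path[::-1]


# Hypothetical plan: durations in working days.
durations = {"requirements": 10, "ui design": 5, "db design": 8, "build": 20, "test": 7}
depends_on = {
    "ui design": {"requirements"},
    "db design": {"requirements"},
    "build": {"ui design", "db design"},
    "test": {"build"},
}
print(critical_path(durations, depends_on))
```

In this made-up plan the database design, not the UI design, sits on the critical path, so a slip there delays the whole project while the UI work has three days of slack.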

Step 4: Implementation phase automation (weeks 5-8)

The implementation phase benefits most from real-time monitoring and predictive bottleneck detection. AI transforms reactive project management into proactive problem-solving.

Automation priorities:

- Deploy ClickUp Brain or Motion within your project management system to enable automated task scheduling based on dependencies

- Set up AI dashboards for progress tracking with automatic milestone alerts

- Configure quality gates for automatic code quality and documentation checks

- Implement predictive systems that analyze work patterns to prevent delays

Teams report that automated quality gates catch 60% more issues during implementation compared to manual reviews. The AI learns from each project, continuously improving its ability to predict and prevent common problems.
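At its simplest, an automated quality gate is a set of rules evaluated against each build's metrics. Here is a minimal sketch of that idea, assuming a CI job that emits the fields shown. The `BuildReport` fields, the rule names, and the thresholds are hypothetical, and the learning behavior described above is not modeled.

```python
from dataclasses import dataclass


@dataclass
class BuildReport:
    """Metrics a CI job might emit for one build (hypothetical fields)."""
    test_coverage: float           # fraction of lines covered, 0..1
    lint_errors: int
    undocumented_public_apis: int


# Hypothetical thresholds; each team would set its own.
GATE_RULES = {
    "coverage >= 80%": lambda r: r.test_coverage >= 0.80,
    "no lint errors": lambda r: r.lint_errors == 0,
    "public APIs documented": lambda r: r.undocumented_public_apis == 0,
}


def evaluate_gate(report: BuildReport) -> list[str]:
    """Return the names of failed rules; an empty list means the gate passes."""
    return [name for name, rule in GATE_RULES.items() if not rule(report)]


failures = evaluate_gate(BuildReport(test_coverage=0.76, lint_errors=0, undocumented_public_apis=3))
print(failures or "gate passed")
```

Keeping the rules in one named table makes the gate auditable: reviewers can see exactly which checks blocked a build and why.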

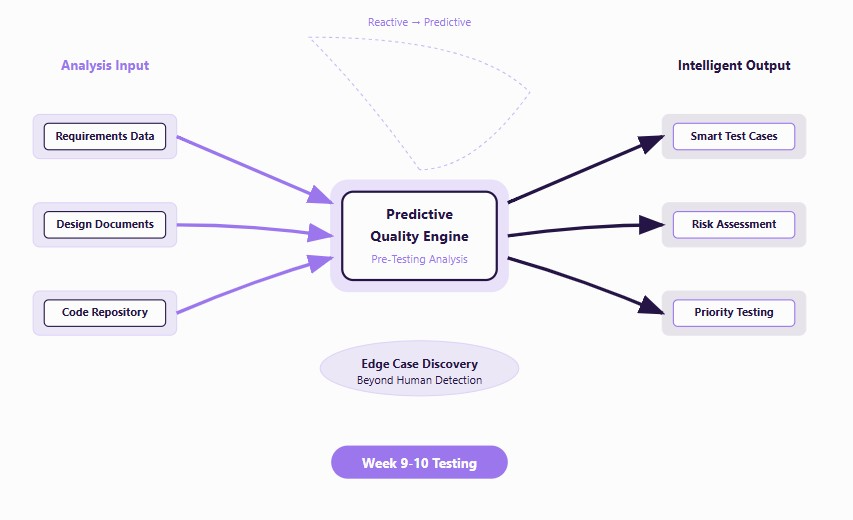

Step 5: Testing phase AI integration (weeks 9-10)

AI revolutionizes testing by moving from reactive bug discovery to predictive quality assurance. Machine learning models identify high-risk areas before testing even begins.

Testing transformation steps:

- Implement automated test case generation based on requirements and design documents

- Deploy machine learning models to identify high-risk code areas pre-testing

- Set up intelligent test execution with AI-prioritized test cases

- Configure automated regression testing that triggers on code changes

Game-changer insight: AI-powered test case generation creates 40% more comprehensive test scenarios than manual approaches, often identifying edge cases that human testers overlook.
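Risk-based test prioritization can be illustrated with a simple heuristic in place of a trained model. The sketch below ranks test cases by how much their module changed recently and how many defects it has produced before. The `SuiteEntry` schema, the scoring formula, and the sample numbers are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """One test case and the module it exercises (hypothetical schema)."""
    name: str
    module: str


def risk_score(changed_lines: int, past_defects: int) -> int:
    """Illustrative heuristic: more recent churn and more past defects mean higher risk."""
    return changed_lines * (1 + past_defects)


def prioritize(
    tests: list[SuiteEntry],
    churn: dict[str, int],
    defects: dict[str, int],
) -> list[str]:
    """Return test names ordered from highest to lowest module risk."""
    ranked = sorted(
        tests,
        key=lambda t: risk_score(churn.get(t.module, 0), defects.get(t.module, 0)),
        reverse=True,
    )
    return [t.name for t in ranked]


suite = [
    SuiteEntry("test_login_flow", "auth"),
    SuiteEntry("test_refund", "payments"),
    SuiteEntry("test_monthly_export", "reports"),
]
churn = {"auth": 10, "payments": 120, "reports": 300}   # lines changed this release
defects = {"auth": 1, "payments": 4, "reports": 0}      # defects found in past releases
print(prioritize(suite, churn, defects))
```

A machine learning model would learn the weighting from your own defect history instead of using a fixed formula, but the output is the same kind of ordered list fed to the test runner.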

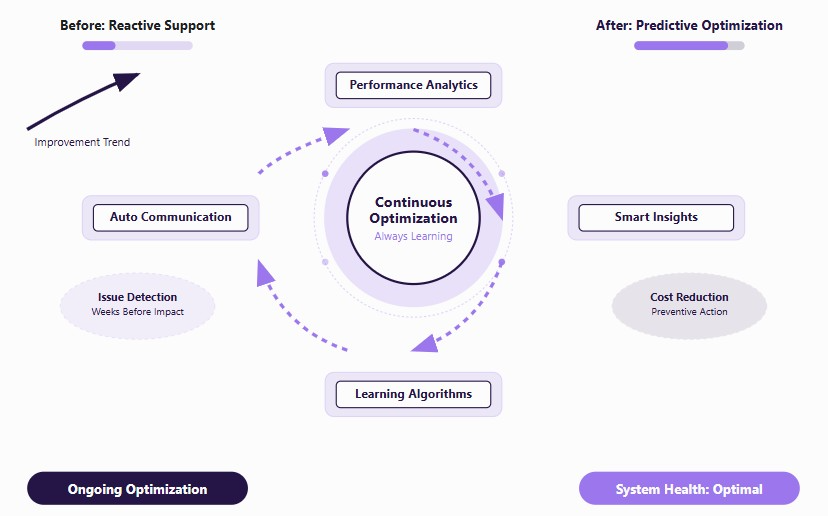

Step 6: Maintenance phase AI optimization (ongoing)

The maintenance phase shifts from reactive support to predictive optimization. AI continuously monitors system performance and recommends improvements before issues impact users.

Ongoing optimization elements:

- Deploy predictive analytics dashboards for system performance monitoring

- Set up automated reporting with AI-generated insights and recommendations

- Implement continuous improvement algorithms that analyze project outcomes

- Configure stakeholder communication automation for relevant project updates

Success story: One enterprise client reduced maintenance costs by 45% using predictive analytics to identify system issues weeks before they would have caused downtime.
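One common building block behind predictive monitoring is flagging readings that deviate sharply from recent history. The sketch below uses a rolling z-score for this; it is a deliberately simple stand-in for a predictive analytics product, and the window size, threshold, and latency samples are made up.

```python
from statistics import mean, stdev


def early_warnings(samples: list[float], window: int = 5, z_limit: float = 3.0) -> list[int]:
    """Return indexes of samples more than `z_limit` standard deviations
    above the mean of the preceding `window` samples."""
    flagged = []
    for i in range(window, len(samples)):
        recent = samples[i - window:i]
        spread = stdev(recent)
        # Skip perfectly flat windows to avoid dividing by zero.
        if spread > 0 and (samples[i] - mean(recent)) / spread > z_limit:
            flagged.append(i)
    return flagged


# Hypothetical response times in milliseconds, with one spike.
latency_ms = [101, 99, 100, 102, 98, 100, 101, 140, 99, 100]
print(early_warnings(latency_ms))
```

The same check applies to model-quality signals such as prediction error, which is one way to feed the drift alerts tracked in your maintenance logs.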

Step 7: Integration validation and optimization (weeks 11-12)

The final step ensures your AI integration delivers measurable value and continuous improvement. This validation phase establishes the foundation for scaling AI across all waterfall projects.

Validation framework:

- Compare AI-enhanced metrics against baseline measurements for quantifiable improvement

- Fine-tune machine learning algorithms based on initial results and team feedback

- Provide comprehensive training on new AI-enhanced workflows

- Document updated waterfall methodology that includes AI integration points

Critical success factor: Teams that invest in thorough algorithm fine-tuning during this phase see 25% better performance in subsequent projects. The AI learns your organization's specific patterns and improves its predictions accordingly.
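Comparing AI-enhanced metrics against the baseline comes down to a percent-change calculation per metric. A minimal sketch follows, with hypothetical metric names and values; note that whether a positive change is good depends on the metric.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent, rounded to one decimal."""
    return round((current - baseline) / baseline * 100, 1)


def compare_to_baseline(baseline: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Percent change for every metric present in both snapshots."""
    return {
        metric: percent_change(baseline[metric], current[metric])
        for metric in baseline
        if metric in current
    }


# Made-up snapshots for illustration only.
before = {"timeline_accuracy": 0.80, "post_release_defects": 40}
after = {"timeline_accuracy": 0.88, "post_release_defects": 30}
print(compare_to_baseline(before, after))
```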

This 12-week integration process, supported by your project management software, balances thorough planning with rapid value delivery. Most organizations see immediate benefits in the requirements and design phases, with compound improvements throughout the project lifecycle.

Establishing AI governance: Essential controls for waterfall project success

When integrating AI into waterfall projects, especially in regulated industries, you need robust governance frameworks that maintain compliance while enabling innovation. Traditional waterfall phase gates must evolve to include AI-specific checkpoints that ensure responsible deployment throughout your sequential workflow.

Effective AI governance prevents costly compliance violations and builds stakeholder confidence in your AI-enhanced processes.

Mapping AI responsibilities to waterfall artifacts

Your existing waterfall documentation becomes the foundation for AI accountability. Each traditional artifact now requires AI-specific components that track model decisions and validate outcomes.

Essential AI documentation integration:

- Software requirements specification (SRS) - Include AI model requirements, data sources, and performance thresholds

- System design specification (SDS) - Document AI architecture, integration points, and fallback mechanisms

- Test plan documentation - Add AI model validation procedures, bias testing protocols, and performance benchmarks

- Maintenance logs - Track model drift, retraining schedules, and performance degradation alerts

Key insight: Embedding AI documentation within existing waterfall artifacts ensures governance compliance without creating parallel documentation systems that teams might ignore.

RACI matrix for AI-waterfall integration

Clear role definitions prevent accountability gaps that could compromise AI governance or project success. This RACI framework maps specific AI responsibilities to your waterfall team structure.

Critical role assignments:

- Project manager - Responsible for AI integration timeline, accountable for overall governance compliance

- Business analyst - Responsible for AI requirements validation, consulted on model performance criteria

- Quality assurance - Responsible for AI testing protocols, accountable for bias detection and mitigation

- System architect - Responsible for AI technical integration, consulted on model selection decisions

- Data steward - Accountable for data quality and privacy, responsible for training data validation

- Model owner - Responsible for AI model performance, accountable for ongoing model maintenance

- Risk officer - Consulted on AI risk assessment, informed of model deployment decisions

Pro tip: Assign dual accountability for critical AI decisions between technical and business stakeholders. This prevents purely technical decisions that ignore business risk or business decisions that ignore technical constraints.
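A RACI matrix is easy to check mechanically once it is stored as data. The sketch below flags activities that lack a Responsible or an Accountable owner. The first two rows follow the assignments listed above; the Responsible and Accountable entries on the third row are assumptions added for illustration.

```python
# Activity -> {role: RACI code}. Codes: R, A, C, I.
RACI = {
    "AI testing protocols": {"Quality assurance": "R"},
    "Data quality and privacy": {"Data steward": "A"},
    "Model deployment decision": {
        "Model owner": "R",      # assumption for illustration
        "Project manager": "A",  # assumption for illustration
        "Risk officer": "I",
    },
}


def accountability_gaps(raci: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """For each activity, list which of 'R' and 'A' nobody holds."""
    gaps = {}
    for activity, assignments in raci.items():
        missing = [code for code in ("R", "A") if code not in assignments.values()]
        if missing:
            gaps[activity] = missing
    return gaps


print(accountability_gaps(RACI))
```

Running a check like this at each phase gate surfaces the accountability gaps before they turn into governance findings.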

Phase-gate checklists for AI compliance

Each waterfall phase gate requires AI-specific checkpoints that validate responsible deployment before proceeding to the next phase. These checklists ensure systematic governance without slowing project momentum.

Requirements phase checklist:

- Bias impact assessment completed and documented

- Data privacy compliance verified for all AI training sources

- Model explainability requirements defined and approved

Design phase checklist:

- AI architecture review completed by the security team

- Model validation framework designed and approved

- Fallback procedures documented for AI system failures

Implementation phase checklist:

- Model performance meets defined thresholds in the test environment

- Bias testing completed with acceptable results documented

- Explainability documentation generated and reviewed by stakeholders

Testing phase checklist:

- End-to-end AI system validation completed successfully

- User acceptance testing includes AI-specific scenarios

- Risk mitigation plans tested and validated

Deployment phase checklist:

- Final model performance sign-off from all RACI stakeholders

- Monitoring dashboards configured for ongoing AI governance

- Emergency rollback procedures tested and documented
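These checklists can be enforced in tooling by treating each gate as a list of required sign-offs. Here is a minimal sketch covering the first two gates, using the checklist items above; the function names and the idea of tracking sign-offs as a set are assumptions, not a feature of any specific tool.

```python
PHASE_GATES = {
    "requirements": [
        "Bias impact assessment completed and documented",
        "Data privacy compliance verified for all AI training sources",
        "Model explainability requirements defined and approved",
    ],
    "design": [
        "AI architecture review completed by the security team",
        "Model validation framework designed and approved",
        "Fallback procedures documented for AI system failures",
    ],
}


def open_items(phase: str, signed_off: set[str]) -> list[str]:
    """Checklist items for `phase` that have not been signed off yet."""
    return [item for item in PHASE_GATES[phase] if item not in signed_off]


def gate_passed(phase: str, signed_off: set[str]) -> bool:
    """A gate passes only when every checklist item has been signed off."""
    return not open_items(phase, signed_off)


done = {
    "Bias impact assessment completed and documented",
    "Model explainability requirements defined and approved",
}
print(gate_passed("requirements", done), open_items("requirements", done))
```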

This systematic approach to AI governance transforms potentially chaotic AI integration into a controlled, compliant process that regulatory bodies can easily audit and stakeholders can confidently approve.

Proving AI value: Measurable ROI and performance tracking for waterfall projects

Executive leadership demands quantifiable evidence that AI integration delivers tangible business value. This comprehensive ROI framework and KPI dashboard provide the metrics needed to justify AI investment and track ongoing performance improvements.

Transform your AI initiative from a cost center into a proven value driver with measurable outcomes.

Cost-benefit analysis framework

Understanding the complete financial picture requires mapping all implementation costs against measurable benefits. This balanced approach ensures realistic ROI expectations and proper budget allocation.

Implementation cost buckets:

- AI tool licensing - Platform subscriptions (Microsoft Project AI, ClickUp Brain, predictive analytics tools)

- Data preparation work - Historical data cleaning, integration setup, and quality validation

- Team training investment - Skills development, certification programs, and change management support

- Technical integration - API connections, workflow customization, and system configuration

Quantifiable benefit categories:

- Cycle-time reduction - Faster project completion through automated scheduling and bottleneck prediction

- Rework elimination - Fewer design changes and requirement gaps caught early by AI analysis

- Defect prevention - Reduced post-deployment issues through predictive quality assurance

- Resource optimization - Better capacity planning and skill matching for improved productivity

Financial reality check: When the integration is properly implemented, most organizations see break-even within 6-8 months, with full ROI realized by month 12 through compound improvements across multiple projects.
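The break-even and ROI arithmetic is straightforward once costs and benefits are expressed per month. The sketch below assumes a one-time upfront cost plus flat monthly costs and benefits, which is a simplification; all dollar figures are hypothetical.

```python
from typing import Optional


def break_even_month(
    upfront: float,
    monthly_cost: float,
    monthly_benefit: float,
    horizon: int = 36,
) -> Optional[int]:
    """First month in which cumulative benefits cover cumulative costs,
    or None if that does not happen within `horizon` months."""
    cumulative = -upfront
    for month in range(1, horizon + 1):
        cumulative += monthly_benefit - monthly_cost
        if cumulative >= 0:
            return month
    return None


def roi(upfront: float, monthly_cost: float, monthly_benefit: float, months: int) -> float:
    """Net gain divided by total cost over the given number of months."""
    total_cost = upfront + monthly_cost * months
    return (monthly_benefit * months - total_cost) / total_cost


# Hypothetical figures: licensing, data preparation, and training paid upfront.
print(break_even_month(60_000, 5_000, 15_000))
print(roi(60_000, 5_000, 15_000, months=12))
```

With these made-up inputs the investment breaks even in month 6 and returns 50% on total cost by month 12. Plug in your own cost buckets and measured benefits to see where your project lands.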

Essential KPIs for AI-waterfall success

These performance indicators provide real-time visibility into AI integration effectiveness and help identify optimization opportunities before they impact project outcomes.

Core performance metrics:

- Schedule variance - Percentage difference between AI-predicted and actual project timelines

- Defect leakage rate - Number of post-deployment issues per thousand lines of code or feature points

- Review effort saved - Hours reduced in manual code reviews, design validations, and requirement analysis

- Mean time to resolution (MTTR) - Average time to identify and fix issues in the maintenance phase
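Each of these core metrics reduces to a short formula. A minimal sketch follows, assuming schedule variance is measured relative to the AI-predicted duration and defect leakage is reported per thousand lines of code; the function names and sample values are hypothetical.

```python
from statistics import mean


def schedule_variance_pct(predicted_days: float, actual_days: float) -> float:
    """Percent by which the actual timeline differed from the AI prediction.
    Positive means the project ran longer than predicted."""
    return round((actual_days - predicted_days) / predicted_days * 100, 1)


def defect_leakage_per_kloc(post_release_defects: int, lines_of_code: int) -> float:
    """Post-deployment defects per thousand lines of code."""
    return post_release_defects / (lines_of_code / 1000)


def mttr_hours(resolution_hours: list[float]) -> float:
    """Mean time to resolution across closed maintenance issues."""
    return mean(resolution_hours)


print(schedule_variance_pct(predicted_days=90, actual_days=99))
print(defect_leakage_per_kloc(post_release_defects=12, lines_of_code=48_000))
print(mttr_hours([2, 4, 6]))
```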

Leading indicators:

- Model prediction accuracy trends

- Team adoption rates of AI recommendations

- Data quality scores for training algorithms

- Stakeholder satisfaction with AI-generated insights

Pro tip: Track adoption resistance metrics alongside performance indicators. Low tool usage often signals training gaps or process friction that can derail your entire AI initiative.

This data-driven approach to measuring AI integration success provides the concrete evidence executives need to support continued investment and expansion of AI capabilities across additional waterfall projects.

Bringing intelligence and foresight to waterfall delivery

Integrating AI into waterfall isn’t about replacing structure; it’s about elevating every phase with foresight, automation, and accuracy. From sharper requirements and smarter design to predictive testing and proactive maintenance, AI transforms waterfall into a framework that delivers with speed and confidence.

The real advantage lies in cutting rework, proving ROI, and future-proofing delivery. Start small, scale responsibly, and let AI turn waterfall from rigid and reactive into intelligent and unstoppable.
